Case Study

An App that Would Revolutionize an Industry.

Client: LDARtools

Project: LDAR App, Legacy Update, and a Compliance Platform

LDARtools was determined to create a better leak detection monitoring and compliance platform.

Existing platforms were written with dated technologies, failed to produce reproducible results, and in one case even relied on a poorly extended version of an application used to monitor fire extinguishers.

A contemporary, powerful, flexible and full-featured app.

Overview

Since 2007, LDARtools has worked to become the innovation leader in its industry, Leak Detection and Repair (LDAR) compliance. Building on the decades of in-the-trenches experience of its founder, Rex Moses, this family-run company has built its reputation on providing solid LDAR hardware and software solutions based on the latest technology.

In 2013, Art+Logic was hired to create a small app that would organize the routes of in-field technicians surveying every joint, valve, and fixture at a massive processing location.

Later that year, LDARtools asked Art+Logic to hop on a legacy project that was in need of debugging and refactoring.

Then, in 2015, LDARtools contracted with Art+Logic to build out a massive compliance platform: Chateau.

Goals + Objectives

When we began the project, the client was determined to create a better LDAR monitoring and compliance platform. Monitoring fugitive emissions is a labor-intensive, audit-prone, and expensive part of running any oil, chemical, natural gas, or other processing and manufacturing company. Remaining compliant with local, state, and federal EPA mandates is necessary to avoid expensive fines and disruptions to production and to ensure the air we breathe remains safe.

However, at the time, people looking for a monitoring platform could choose from only two very poor solutions. For all the importance placed on LDAR, the platforms available were written with dated technologies, failed to produce reproducible results, and, in one case, relied on a poorly extended version of an application originally used to monitor fire extinguishers.

The client had a vision for a contemporary, powerful, flexible, and full-featured app that would tie together all aspects of LDAR: building out and applying new rules, preparing government-ready reports, tracking and approving repairs, and organizing and scheduling teams for onsite analysis so that a user could be assured that the staff on hand could complete adequate monitoring at the appropriate times.

At Art+Logic, we often hear that an app would “revolutionize” an industry, and, many times, that’s hyperbole. In this case, however, this project would actually do just that.

Leaking equipment, such as valves, pumps, and connectors, is a large source of emissions of volatile organic compounds (VOCs) and volatile hazardous air pollutants (VHAPs).

Leak Detection and Repair: A Best Practices Guide is intended for use by regulated entities, such as petroleum refineries and chemical manufacturing facilities, as well as compliance inspectors. The guide details some of the problems identified with leak detection and repair (LDAR) programs. It focuses on Method 21 requirements and describes the practices that can be used to increase the effectiveness of an LDAR program.

In 1999, the EPA estimated that noncompliance on fugitive emissions released an additional 40,000 tonnes of VOCs into the air.

The App Development Philosophy

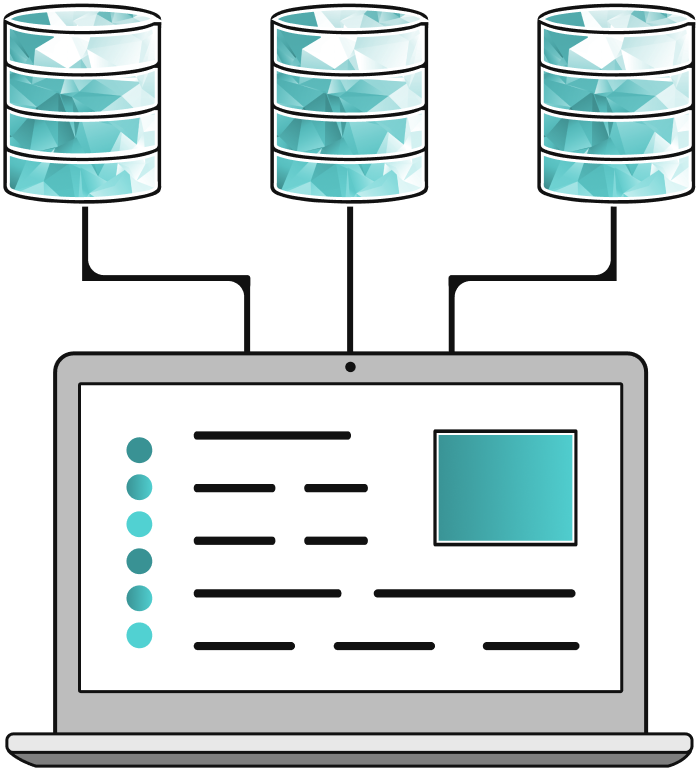

Data-Driven System

The data is supreme; everything else follows the data. Whenever data design and code design ideas are at odds, by default, data wins. Object orientation was not maximized to its full potential but rather was honed to facilitate data management, especially via the ORM capabilities of the Entity Framework. Object-oriented design was employed more fully in the UI.

Rule-Based System

Many activities, from data entry to scheduling inspections, are affected by rules. Although some simpler aspects of rules could be represented in the database, there are some aspects that could not or should not be evaluated in the database. This meant that the Business Engine became the centerpiece of the Chateau system.

Complex rules are evaluated in Workflow Foundation (WF) activities, each of which processes a specific part of the rules. When a flow ends, its results are saved back to the database, where they later serve as inputs to other flows.

Because rules had to be highly flexible in both representation and configuration, overly rigid data structures would not suffice. We chose to represent the semi-structured data of rules in XML. This choice made rules easier to configure in the Builder app. At the same time, we employed schema validation to ensure that rules met a minimal standard before being parsed and saved to the database.
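To make the "minimal standard" idea concrete, here is a small sketch in Python rather than the project's .NET stack. It is not Chateau's schema: the `<rule>` and `<condition>` element names, the required attributes, and the sample values are all assumptions made for illustration, and a hand-written structural check stands in for real XML Schema validation.

```python
import xml.etree.ElementTree as ET

# Hypothetical minimal standard for a rule document. The element and
# attribute names are illustrative and do not come from Chateau.
REQUIRED_RULE_ATTRS = ("id", "regulation")
REQUIRED_CONDITION_ATTRS = ("field", "op", "value")


def validate_rule_xml(xml_text):
    """Return a list of problems; an empty list means the rule may be saved."""
    try:
        root = ET.fromstring(xml_text)
    except ET.ParseError as exc:
        return [f"not well-formed XML: {exc}"]

    problems = []
    if root.tag != "rule":
        problems.append(f"root element must be <rule>, got <{root.tag}>")
    for attr in REQUIRED_RULE_ATTRS:
        if attr not in root.attrib:
            problems.append(f"<rule> is missing attribute '{attr}'")
    conditions = root.findall("condition")
    if not conditions:
        problems.append("<rule> needs at least one <condition>")
    for i, cond in enumerate(conditions, start=1):
        for attr in REQUIRED_CONDITION_ATTRS:
            if attr not in cond.attrib:
                problems.append(f"<condition> #{i} is missing attribute '{attr}'")
    return problems


# Made-up sample documents: one complete, one missing required attributes.
good = '<rule id="r1" regulation="example"><condition field="ppm" op="gt" value="500"/></rule>'
bad = '<rule id="r2"><condition field="ppm"/></rule>'

print(validate_rule_xml(good))  # no problems reported
for problem in validate_rule_xml(bad):
    print(problem)
```

The point of checking before parsing and saving is that a rule author gets a list of specific problems back, and a malformed rule never reaches the database.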

Service-Oriented System

Due to the importance of complex business rules, the Business Engine must not be bypassed; therefore, users must not access the database directly. Instead, all data access is performed via services. Similarly, third-party developers should not access the database directly. Instead, third-party developers will create service clients.

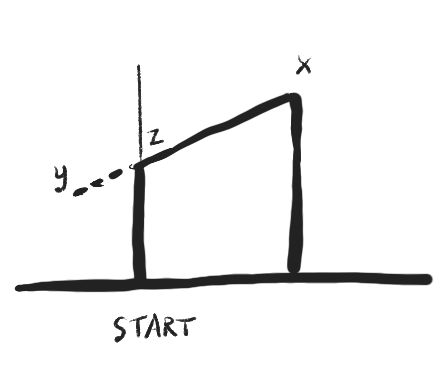

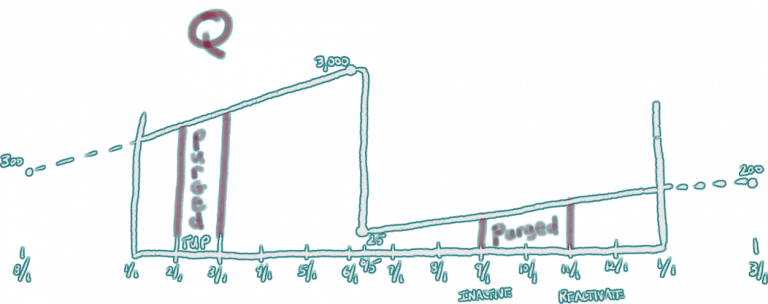

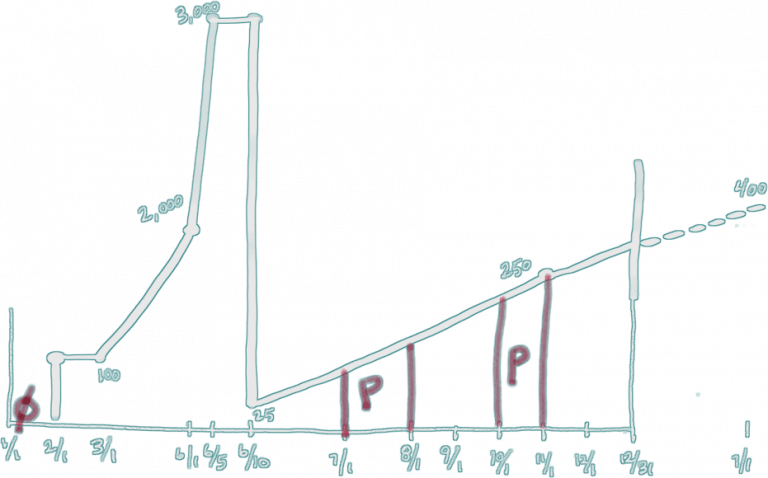

Strictly speaking, there is no need to provide libraries to third-party developers. Developers could use service discovery alone to generate proxy classes. However, as a courtesy to .NET developers, it is possible to provide a library of all the data model classes, which would save them the trouble of generating proxies.

Communication with the Business Engine is done mostly via Windows Communication Foundation (WCF) service methods. WCF supports many bindings. For improved speed and security, we chose Net.TCP by default. In some cases, namely, file transfers, we use other protocols such as HTTP.

Most service methods use plain-old C# objects (POCOs) defined as data transfer objects (DTOs). In cases where variable or semi-structured data is desired (e.g. to populate a data grid with variable columns), methods return DataSets. Most update methods return the updated object; this serves as a confirmation of the update.

Using WCF, exceptions thrown by the Business Engine are turned into faults sent to the client. This usually provides more information than other error reporting mechanisms. Exceptions are thrown whenever unexpected behavior occurs. This includes a failure to update data or a failure to find a unique item.
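The two conventions described above (update methods return the updated object, and exceptions become faults at the service boundary) can be sketched outside of WCF. The following Python sketch is only an analogy: `ComponentDto`, `Fault`, `ComponentService`, and `update_tag` are hypothetical names, and an in-memory list stands in for the database.

```python
from dataclasses import dataclass, replace


@dataclass(frozen=True)
class ComponentDto:
    """Hypothetical data transfer object; the field names are assumptions."""
    component_id: int
    tag: str


@dataclass(frozen=True)
class Fault:
    """Stand-in for the fault a client receives instead of a raw exception."""
    code: str
    detail: str


class NotUniqueError(Exception):
    """Raised when a lookup does not match exactly one item."""


class ComponentService:
    def __init__(self, rows):
        self._rows = list(rows)  # in-memory stand-in for the database

    def _find_unique(self, component_id):
        matches = [r for r in self._rows if r.component_id == component_id]
        if len(matches) != 1:
            raise NotUniqueError(
                f"expected 1 component with id {component_id}, found {len(matches)}"
            )
        return matches[0]

    def update_tag(self, component_id, new_tag):
        """Return the updated DTO on success, or a Fault describing the failure."""
        try:
            current = self._find_unique(component_id)
            updated = replace(current, tag=new_tag)
            self._rows[self._rows.index(current)] = updated
            return updated  # the returned object doubles as confirmation
        except NotUniqueError as exc:
            # Translate the exception at the boundary so the caller gets detail.
            return Fault(code="NotUnique", detail=str(exc))


service = ComponentService([ComponentDto(1, "V-100")])
print(service.update_tag(1, "V-101"))
print(service.update_tag(2, "V-200"))
```

In this sketch the caller can tell success from failure by the type of the result, and the fault carries the original exception message rather than a generic error.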

DBA: Design, Reporting and ETL

Chateau needed a reporting system that would allow rapid development, easy conversion of reports into a variety of formats, and easy integration with the larger Windows Presentation Foundation application. It also had to serve reports via a web browser, so that no client application would need to be installed to retrieve them. SQL Server Reporting Services was a great fit. Not only were Art+Logic developers able to create a wide range of reports, which were a strong selling point for Chateau, but we were also able to quickly train some LDARtools personnel to use the client tools to develop reports themselves.

LTI’s clients have, in many cases, years of historical data in legacy LDAR systems. Some clients have relatively small plants with small numbers of components; others range into the tens of thousands. LTI would not be able to make a strong sales case for Chateau if there were no way to load this legacy data into Chateau.

Loading this data, however, ran into three serious obstacles.

Obstacle #1

Critical descriptors of legacy data, such as component type or component repair method, are not standardized, and mismatches exist between legacy data and Chateau.

Solution

An interface was created to allow the subject matter experts at LDARtools to analyze component type differences. This data was used to build a translation table which was integrated with the data loading process.
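A translation table of this kind can be pictured as a simple lookup applied during loading. The Python sketch below assumes a made-up table and made-up legacy values; `translate_component_types` and the `component_type` key are hypothetical names. Rows with no mapping are set aside for expert review instead of being guessed at.

```python
def translate_component_types(legacy_rows, translation):
    """Split rows into (translated, unmapped) using a legacy -> target lookup."""
    translated, unmapped = [], []
    for row in legacy_rows:
        key = row["component_type"].strip().upper()
        if key in translation:
            # Copy the row, replacing only the component type.
            translated.append({**row, "component_type": translation[key]})
        else:
            unmapped.append(row)
    return translated, unmapped


# Illustrative table only; a real one would come from subject matter experts.
TRANSLATION = {"VLV": "Valve", "PMP": "Pump", "CONN": "Connector"}

legacy = [
    {"id": 1, "component_type": "vlv"},
    {"id": 2, "component_type": "PMP "},
    {"id": 3, "component_type": "XYZ"},
]
translated, unmapped = translate_component_types(legacy, TRANSLATION)
print(translated)
print(unmapped)
```

Returning the unmapped rows separately keeps the load repeatable: each review round extends the table, and the same process can be run again.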

Obstacle #2

Some data in legacy systems was free-form text, with numerous alternate spellings, abbreviations, and other artifacts.

Solution

Candidates for duplicate data were extracted, presented to the client for approval, and then eliminated in the source queries extracting data from legacy systems.
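One simple way to surface duplicate candidates is to group values that become identical after normalization. The Python sketch below is an assumption about how such a pass might look, not the project's actual queries. It catches only differences in case, punctuation, and spacing, so abbreviations and misspellings would still need a person or a fuzzier match.

```python
import re
from collections import defaultdict


def normalize(text):
    """Lowercase, turn punctuation into spaces, and collapse whitespace."""
    return " ".join(re.sub(r"[^a-z0-9]+", " ", text.lower()).split())


def duplicate_candidates(values):
    """Map each normalized form to the distinct raw spellings that share it."""
    groups = defaultdict(set)
    for value in values:
        groups[normalize(value)].add(value)
    # Only forms with more than one raw spelling are worth reviewing.
    return {key: sorted(raw) for key, raw in groups.items() if len(raw) > 1}


# Made-up free-form entries of the kind a legacy system might hold.
entries = ["Tighten Packing", "tighten packing.", "TIGHTEN  PACKING", "Replace gasket"]
for key, spellings in duplicate_candidates(entries).items():
    print(key, "<-", spellings)
```

The output is a review list, which matches the approval step described above: nothing is merged until someone confirms that the spellings mean the same thing.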

Obstacle #3

Legacy systems store edit histories of data in a form that is difficult to extract in a way that is compatible with the Chateau data model.

Solution

We were able to use T-SQL’s window functions to analyze the legacy historical data in a way that isolated and tracked changes to each individual field, even though the legacy systems tracked all field changes as a single record.
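The general technique can be sketched with the `LAG` window function, which T-SQL and SQLite both provide. The example below runs against an in-memory SQLite database from Python (SQLite 3.25 or later is assumed). The `legacy_history` table, its columns, and the sample rows are invented for illustration and are not the legacy schema.

```python
import sqlite3

conn = sqlite3.connect(":memory:")
conn.executescript("""
CREATE TABLE legacy_history (
    component_id INTEGER, revision INTEGER, location TEXT, size TEXT
);
-- Each row is a full snapshot, as in a system that logs all fields at once.
INSERT INTO legacy_history VALUES
    (1, 1, 'Unit A', '2in'),
    (1, 2, 'Unit A', '3in'),
    (1, 3, 'Unit B', '3in');
""")

# LAG fetches the previous snapshot's value for the same component, so each
# field can be compared with its own prior value and reported separately.
QUERY = """
WITH snapshots AS (
    SELECT component_id, revision,
           location,
           LAG(location) OVER (PARTITION BY component_id ORDER BY revision) AS prev_location,
           size,
           LAG(size) OVER (PARTITION BY component_id ORDER BY revision) AS prev_size
    FROM legacy_history
)
SELECT component_id, revision, 'location' AS field,
       prev_location AS old_value, location AS new_value
FROM snapshots
WHERE prev_location IS NOT NULL AND location <> prev_location
UNION ALL
SELECT component_id, revision, 'size', prev_size, size
FROM snapshots
WHERE prev_size IS NOT NULL AND size <> prev_size
ORDER BY component_id, revision, field
"""

field_changes = conn.execute(QUERY).fetchall()
for change in field_changes:
    print(change)
```

Each output row describes one field changing in one revision. This sketch skips the first snapshot and ignores changes to or from NULL, which a real migration would have to handle explicitly.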

Challenges

Technical

The technical challenge was to build a highly flexible and complex app while maintaining a high degree of data and reporting compliance. Competitor applications required users to run reports multiple times to triangulate a rough result. This rough result meant that some companies were over-monitoring and wasting money, or under-monitoring and exposing themselves to expensive EPA fines.

Previous applications required users to update by installing from update disks, which was a scaling nightmare. We needed to build a Rule Building Engine that would allow users to define rules according to the local, state, and federal guidelines for their industry, while ensuring that the rules they created mapped to the application and produced reproducible and reliable reports.

Business

By far, the largest challenge on this project was extracting requirements, and extracting them fast enough to run several simultaneous milestone development efforts. The client is an industry domain expert, having worked in the LDAR field for decades. Many of the project requirements had to be extracted, organized, and transformed into project specifications from the vast knowledge base that existed in Rex Moses’s head: he knew the current workflows as well as where competitors’ creaky applications failed or fell short of giving today’s users the information they needed. Although Rex and his company did document the features of the application, the many nuanced relationships among those features and the data at the heart of them needed to be teased apart one thread at a time.

PM Approach: Running Multiple Milestones at Once

Given the size and ambition of the project, the Chateau team started out small, with only two lead developers, a designer, a PM, and a QA engineer. This reflected the team’s initial focus on learning the client’s vision for Chateau intimately, understanding the problem domain, and building a detailed backlog and initial sequencing plan for the project.

This phase lasted through several four-week milestones and included the development of prototypes and proof-of-concept code for key areas of business logic as well as fleshing out the UI design. Though it is tempting to shortcut this phase of a project and dive into doing “real” development right away, taking the time to get a deep understanding of the project at the start saves time and cost in the long run, particularly for a more complex problem domain.

The first phase of Chateau culminated in a goal for an alpha release. This included both identifying the approximate minimum features necessary for alpha as well as a limited business environment where alpha testing would occur. The client identified schedule as the key driver for them, so Art+Logic scaled the team up heavily for the push to alpha.

It’s worth noting that this isn’t the “mythical man-month” approach to making a project go faster: just add more developers. Instead, the scaling up of the team was driven by Art+Logic and the client being able to create requirements for development at a more rapid pace as their mutual understanding of the project matured.

Results

After digesting the results of alpha, we entered a phase of steady development that balanced two kinds of work: enhancing existing features to cope with environmental complexities beyond alpha, and adding new features. Priorities changed when a regulatory change in the client’s business domain created a new business opportunity for them. This changed the direction and sequencing of the project for several milestones while we added a new feature set to address that opportunity. The project then concluded with beta testing and the final release.
