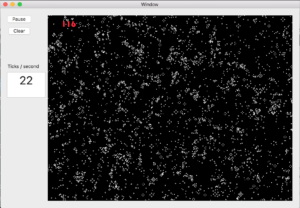

Game of Life showing a 1280×1280 world.

Long ago I gave some off-the-cuff first impressions of Swift. Since then the language has been through several upgrades, but only recently did I find myself trying it out. Specifically, I decided I had the time and inclination to implement Conway’s Game of Life. I wanted to try Swift on as a language, see how hard it makes me work to get to the bytes, and see how efficient I could make my code. Life’s matrix of cells is a good stand-in for the kind of byte-by-byte programming I often need to do, and it offers an easy way to measure performance: time each update of the Life matrix, AKA a tick. What did I learn?

The language itself is fine, and accessing the ACTUAL bytes in memory is as simple as UnsafePointer(myObject). That pointer type even supports pointer arithmetic. Once the compiler had helped me sort out some optional-unwrapping issues, I was mostly* okay with Swift.
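In current Swift, the usual way to reach an array's storage is withUnsafeBufferPointer, which hands a closure a buffer whose baseAddress is an UnsafePointer supporting the same kind of arithmetic. Below is a minimal sketch, assuming the world is stored as a hypothetical [UInt8] with 1 for a live cell and 0 for a dead one; liveCount is an illustrative name, not code from this project.

```swift
/// Counts live cells by walking the array's storage with a pointer.
/// Assumption (hypothetical layout): one UInt8 per cell, 1 = alive, 0 = dead.
func liveCount(_ cells: [UInt8]) -> Int {
    return cells.withUnsafeBufferPointer { buffer -> Int in
        guard var p = buffer.baseAddress else { return 0 }  // no storage to walk
        let end = p + buffer.count      // pointer arithmetic: one past the last cell
        var total = 0
        while p < end {
            total += Int(p.pointee)     // read the byte the pointer refers to
            p += 1                      // advance to the next cell
        }
        return total
    }
}
```

The pointer is only valid inside the closure, which is the trade Swift makes for letting you get at the bytes at all.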

What about writing fast code? The claim is right there in the name, as well as in the WWDC slides from its introduction. And it did produce a fast binary. The baseline implementation was limited mostly by computing the next generation of the world; after optimizing, the only bottleneck was drawing the world to the screen. I didn’t write implementations in other languages to compare overall performance, but I did write both a vectorized and a non-vectorized version. The non-vectorized updates can be made fast enough for small worlds. The vectorized version gave a significantly better tick rate, even on a world 100 times the size of the 128×128 world used for testing (20 ticks per second at 1280×1280). See the numbers below.

The Lessons

1) The compiler can do a lot for you

Since Xcode 6.3, Swift’s compiler has offered "whole module optimization", meaning that the optimizer can see all the code in your module at once, not just the one file being compiled at the time. Turn it on, accept a modest increase in build time, and watch performance jump. My non-vectorized version was pitifully slow without whole module optimization.

2) The compiler can do even more if you help it, maybe†

Marking classes, methods, properties, etc. final or private should let the optimizer be more aggressive, replacing dynamic dispatch with direct calls and inlining more code, something Objective-C cannot support as well without these kinds of annotations. Combine this with whole module optimization and you should get a speed boost for doing the right thing and for telling future maintainers what isn’t meant to be overridden.
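A minimal sketch of what those annotations look like on a non-vectorized world. LifeWorld and its methods are hypothetical names, and the wrap-around (toroidal) edges are an assumption, since the post doesn't say how its world handles edges. Declaring the class final makes every member final, and private storage limits who can touch the cells.

```swift
/// Hypothetical non-vectorized Life world. Because the class is `final`,
/// none of its members can be overridden, so calls to them can be
/// dispatched statically and inlined.
final class LifeWorld {
    let side: Int
    // `private`: only this declaration (and same-file extensions) can
    // access the storage.
    private var cells: [UInt8]

    init(side: Int) {
        self.side = side
        self.cells = [UInt8](repeating: 0, count: side * side)
    }

    /// Cell state, wrapping around the edges (assumed toroidal world).
    func isAlive(row: Int, col: Int) -> Bool {
        let r = (row + side) % side   // valid for row >= -side
        let c = (col + side) % side
        return cells[r * side + c] != 0
    }

    func set(row: Int, col: Int, alive: Bool) {
        cells[row * side + col] = alive ? 1 : 0
    }

    func liveNeighbors(row: Int, col: Int) -> Int {
        var count = 0
        for dr in -1...1 {
            for dc in -1...1 where dr != 0 || dc != 0 {
                if isAlive(row: row + dr, col: col + dc) { count += 1 }
            }
        }
        return count
    }

    /// Advances one generation: birth on 3 neighbors, survival on 2 or 3.
    func tick() {
        var next = cells
        for r in 0..<side {
            for c in 0..<side {
                let n = liveNeighbors(row: r, col: c)
                let alive = isAlive(row: r, col: c)
                next[r * side + c] = (n == 3 || (alive && n == 2)) ? 1 : 0
            }
        }
        cells = next
    }
}
```

Under whole module optimization the compiler can also infer final on its own for internal classes and members that nothing in the module overrides, which might be one reason adding final by hand made little difference in the measurements.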

3) It’s easy to get at the bytes, so existing optimized libraries are easy to use

I implemented a vectorized version that uses UnsafePointer to pass the Float arrays holding the world’s state to DSP functions in the Accelerate library. Combined with whole module optimization, the app went zoom.
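One way to vectorize the neighbor count, sketched under assumptions: the world is a zero-padded (side + 2) × (side + 2) Float array (a hypothetical layout, not necessarily the one used here), and every cell's count is the sum of eight shifted views of that array. Each shift is a single vDSP_vadd call on a pointer offset into the array's storage, so the per-cell additions happen inside Accelerate. The #if canImport(Accelerate) guard and its plain-loop fallback are additions so the sketch also compiles where Accelerate isn't available; neighborCounts, tick, and addInPlace are illustrative names.

```swift
#if canImport(Accelerate)
import Accelerate
#endif

/// dst[k] += src[k] for k in 0..<count, using vDSP where Accelerate exists.
func addInPlace(_ dst: UnsafeMutablePointer<Float>, _ src: UnsafePointer<Float>, count: Int) {
#if canImport(Accelerate)
    vDSP_vadd(dst, 1, src, 1, dst, 1, vDSP_Length(count))
#else
    for k in 0..<count { dst[k] += src[k] }   // portable fallback
#endif
}

/// Live-neighbor counts for a zero-padded world.
/// Assumed layout (hypothetical): (side + 2) x (side + 2) Floats, row-major,
/// 1 = alive, 0 = dead, with a permanently dead one-cell border.
func neighborCounts(_ cells: [Float], side: Int) -> [Float] {
    let width = side + 2
    let start = width + 1                       // index of the first interior cell
    let length = cells.count - 2 * width - 2    // first through last interior cell
    let offsets = [-width - 1, -width, -width + 1, -1, 1, width - 1, width, width + 1]
    var counts = [Float](repeating: 0, count: cells.count)

    cells.withUnsafeBufferPointer { src in
        counts.withUnsafeMutableBufferPointer { dst in
            let out = dst.baseAddress! + start
            // One vector add per neighbor direction: eight shifted views of the world.
            for offset in offsets {
                addInPlace(out, src.baseAddress! + start + offset, count: length)
            }
        }
    }
    return counts   // only interior entries are meaningful
}

/// One generation: birth on 3 neighbors, survival on 2 or 3.
func tick(_ cells: [Float], side: Int) -> [Float] {
    let width = side + 2
    let counts = neighborCounts(cells, side: side)
    var next = [Float](repeating: 0, count: cells.count)
    for row in 1...side {
        for col in 1...side {
            let i = row * width + col
            let n = counts[i]
            if n == 3 || (n == 2 && cells[i] == 1) { next[i] = 1 }
        }
    }
    return next
}
```

The dead border is what makes the flat shifts safe: every interior cell's eight offsets land on true neighbors or on padding, never on a cell from the far edge of another row. The rule step is still a scalar loop here; it could be vectorized too, but the count is where most of the arithmetic is.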

The Numbers

| Optimization | Non-vectorized (ticks/s) | Vectorized (ticks/s) |
|---|---|---|
| Baseline | 14 | 39 |
| Final keyword | 15 | 39 |
| Whole module | 195 | 1200 |
| Whole module, no final | 210 | 1200+ |

Numbers are ideal ticks per second (1 / seconds per tick), not the actual rate at which I updated the world state. Ticks were triggered by a timer firing 30 times per second.

Measurements were made on a 128×128 world, using a 64-bit build running on a 2.2 GHz i7 MacBook Pro with OS X 10.11, compiled with Apple LLVM clang 7.3.0 in Xcode 7.3.
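The ideal rate described above is 1 / (seconds per tick), measured independently of the 30 Hz timer. A minimal sketch of that measurement, assuming a closure that advances the world one generation; idealTicksPerSecond is a hypothetical helper, not the harness used for these numbers.

```swift
import Dispatch

/// Ideal tick rate: 1 / (average seconds per tick), ignoring any timer.
/// `tick` stands in for whatever advances the world one generation.
func idealTicksPerSecond(iterations: Int, _ tick: () -> Void) -> Double {
    precondition(iterations > 0, "need at least one tick to time")
    let start = DispatchTime.now().uptimeNanoseconds
    for _ in 0..<iterations { tick() }
    let elapsed = DispatchTime.now().uptimeNanoseconds - start
    let secondsPerTick = Double(elapsed) / 1_000_000_000 / Double(iterations)
    return 1 / secondsPerTick   // +infinity if the clock didn't advance
}
```

For scale: 14 ticks per second is roughly 71 ms per tick, longer than the 33 ms between firings of a 30 Hz timer, while 1200 ticks per second is about 0.83 ms per tick.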

* It seems like it will be a while before I reliably remember to put the type after the variable name.

† I didn’t see any benefit to adding final to my classes and methods. There may have even been a drop in tick rate when I included final. More research is required.