At Art & Logic, I wear many hats … a likely consequence of having numerous interests: I am passionate about software and the development process; I am passionate about IT operations; I am passionate about information security, cryptography, privacy, and how those topics intersect when our customers seek our services. It does not surprise many of my colleagues to learn that I have been closely following the legal battle between the US Dept. of Justice and Apple over an encrypted iPhone 5c … but they are often surprised by why. This case has potentially far-reaching implications for those of us who write software for a living. It may equally affect our customers: companies that rely on custom software to meet their own business goals.

Make No Mistake, This Is Not Just about One iPhone

The outcome of the legal debate will affect far more than the San Bernardino suspect’s iPhone. It will shape legal precedent, policy, and very likely the form of ‘secure’ technology in the future. That matters to us because we build technology, frequently with security properties our customers require.

If you have not been following the case, a couple of key facts have emerged. First, the work phone of suspect Syed Farook is protected by Apple’s OS using encryption and a four-digit PIN. That last detail is important: neither Apple nor the FBI can undo the encryption on the phone without the key (which is partly derived from the user’s four-digit PIN). Second, it appears that the PIN needed to derive that key was known only to the suspect, who is now deceased. The case aside, it is a good thing that a person without the key cannot get into the phone. Encryption has many subtle yet important uses in modern computing … if it could be trivially broken, our digital world would face a host of troubles. E-commerce and online banking, for example, are built on trust that the details we use to exchange money stay private between the parties to the transaction. Software updates for your phone, tablet, and computer rely on being delivered to the machine without tampering. And, of course, people want to communicate privately with one another. All of these rely on encryption to accomplish their tasks … and require that we can trust that encryption in the first place.

But the FBI is not asking Apple simply to unlock the encrypted phone; Apple can’t do that. Instead, the OS helps protect the device’s key by making brute-force attempts harder. The FBI wants Apple to make small changes to the system that eliminate or weaken those protections. This is important, again, because the passcode in question is only four digits, so there are just 10,000 possible combinations; the math works in the FBI’s favor if they can try them quickly and without any risk of harming the device or its data. Ars Technica has an excellent expanded analysis of the specifics.
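The arithmetic behind “the math works in the FBI’s favor” is short enough to sketch. The Python below is a back-of-the-envelope estimate, not a model of Apple’s actual implementation. It assumes roughly 80 ms per guess (the per-attempt key-derivation cost Apple has described in its iOS security documentation), assumes the PIN is chosen uniformly at random, and treats the optional “erase data after 10 failed attempts” setting as the only limit. The function and constant names are hypothetical.

```python
# Back-of-the-envelope brute-force estimate for a numeric PIN.
# ASSUMPTION: ~80 ms per guess (the key-derivation cost Apple has described);
# real-world timing on a specific device may differ.

SECONDS_PER_GUESS = 0.08  # assumed per-attempt cost
ERASE_AFTER = 10          # optional iOS setting: erase data after 10 failures


def pin_keyspace(digits: int) -> int:
    """Number of possible numeric PINs of the given length."""
    return 10 ** digits


def worst_case_seconds(digits: int, seconds_per_guess: float = SECONDS_PER_GUESS) -> float:
    """Time to try every PIN when no delays or erase limit get in the way."""
    return pin_keyspace(digits) * seconds_per_guess


def success_probability(digits: int, attempts: int) -> float:
    """Chance of hitting a uniformly random PIN within `attempts` distinct guesses."""
    return min(attempts, pin_keyspace(digits)) / pin_keyspace(digits)


if __name__ == "__main__":
    minutes = worst_case_seconds(4) / 60
    print(f"4-digit PIN: {pin_keyspace(4):,} candidates")
    print(f"Protections removed: every PIN tried in about {minutes:.1f} minutes")
    print(f"Erase-after-10 enabled: {success_probability(4, ERASE_AFTER):.1%} chance before wipe")
```

Under these assumptions, the whole 10,000-PIN space falls in about 13 minutes once the protections are gone, while an attacker facing the erase limit has roughly a 0.1% chance. That gap is the point: the protections around the key, not the length of the PIN, are what make guessing impractical.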

Here’s the thing. Cryptography is difficult, complex, and subtle; small alterations can have far-reaching implications (and, as is often the case with complex systems, we cannot predict all the ways a small change might play out). This is why the advice not to implement your own encryption algorithm or design your own secure protocol is nearly universal, at least not without the help of professionals who specialize in cryptography and information security.

The FBI claims that what it seeks will be applied only to this one phone, for this one case. The catch is that these changes cannot meaningfully be restricted to the iPhone in the San Bernardino case, neither technically nor as a matter of legal precedent.

As noted cryptographer Bruce Schneier puts it in his blog post on Decrypting an iPhone for the FBI, “the hacked software … would be general. It would work on any phone of the same model. It has to. Make no mistake; this is what a backdoor looks like.” Many are concerned that if this special software is created, Pandora’s box will be opened … and it will be very difficult to keep a lid on it later, or even to put it back. A physical thing is far easier to keep tabs on; but as recent widespread data breaches affecting US Government agencies show (the OPM and IRS breaches of 2015), it can be very difficult to protect digital data from unauthorized people. Even the Snowden revelations can be viewed in this light: an authorized person can quickly become an unauthorized one by abusing the access they’ve been given. A hacked version of iOS would be a worthy prize for anyone who wishes to abuse it.

The Risks of Weakened Cryptography

We’ve seen what happens when governments force weakened cryptography on products to assist their policing and intelligence efforts. A US policy dating back to the early 1990s required that cryptography exported outside the US be intentionally weakened. Fast forward to today: these same weak ciphers were practically unused, but they lingered on, inactive, because it was easier to turn them off than to remove them from software products. In 2015, a team of researchers disclosed a flaw dubbed FREAK that allowed an attacker to trick implementations of the SSL/TLS protocols into using old, weak “export-grade” crypto left over from this antiquated government policy. Large categories of software (including Microsoft Windows, Apple’s Safari web browser, Google Chrome on Android, and others) were later shown to be vulnerable to this trick, which pushed them into using breakable cryptography.

Intentionally writing flaws into software is a slippery slope, one that most software developers would consider an ethical problem. When a flaw’s clear purpose is to compromise the privacy of other human beings or to harm them, it quickly becomes a moral dilemma.

Helping the government look into a terror suspect’s private data is likely not a difficult question for most Americans. I believe it is a safe bet that nearly all of them, like me, want justice for the San Bernardino victims. But the question remains whether pursuing justice at the likely expense of personal liberty is itself justified. This case will establish a legal precedent, one that will undoubtedly extend to other cases and to situations none of us have yet considered.

Computer Code and the First Amendment

Consider one special wrinkle: in an interview with NPR, Richard Clarke, a former national security official, pointed out that US courts have ruled in ways that support treating computer code as speech, and speech is protected by the First Amendment of the US Constitution. So what, you might ask?

Whether the US Government may compel speech is a sticky issue under the Constitution and the US legal system. May the government compel a private citizen to write code to which they morally object, say, code that can directly harm another human? May it compel a private company to write code that directly harms its own business model, or that of a competitor or another business? This latter question is at the heart of the debate around the FBI’s use of the All Writs Act of 1789. Should Apple be compelled to undermine the security of its own products in the pursuit of justice for terror victims? At other times?

These are not just questions for the US Government, either. If the FBI succeeds in compelling Apple to change its software under US jurisdiction, China and other governments that have been openly hostile to dissidents and political activists will have the support they need to force Apple to do similar things within theirs. (In truth, they don’t necessarily need the FBI to succeed … but success would undoubtedly support their cause.)

We strive hard to do what’s best for our customers at Art & Logic. As an information security practitioner, I take that to mean helping set healthy expectations for our customers about what technology can do to protect what they consider important. If our government dictates the form of those security measures, it will make that job much harder. The opinion of experts in this field is clear: encryption cannot be weakened so that only the lawful holder of a properly executed warrant can get around it … without also opening the path for those who would misuse it illegally. For private citizens to have privacy in a digital age, they must be the sole holders of their keys.

Unfortunately, we must wait to see how the courts resolve the legal battle between Apple and the Justice Department. Personally, I hope the courts heed what many experts in the information security field are saying loudly: forcing weaker technical protections diminishes security for us all, at a time when there has never been more capacity for widespread invasion of privacy (by governments and by criminals).

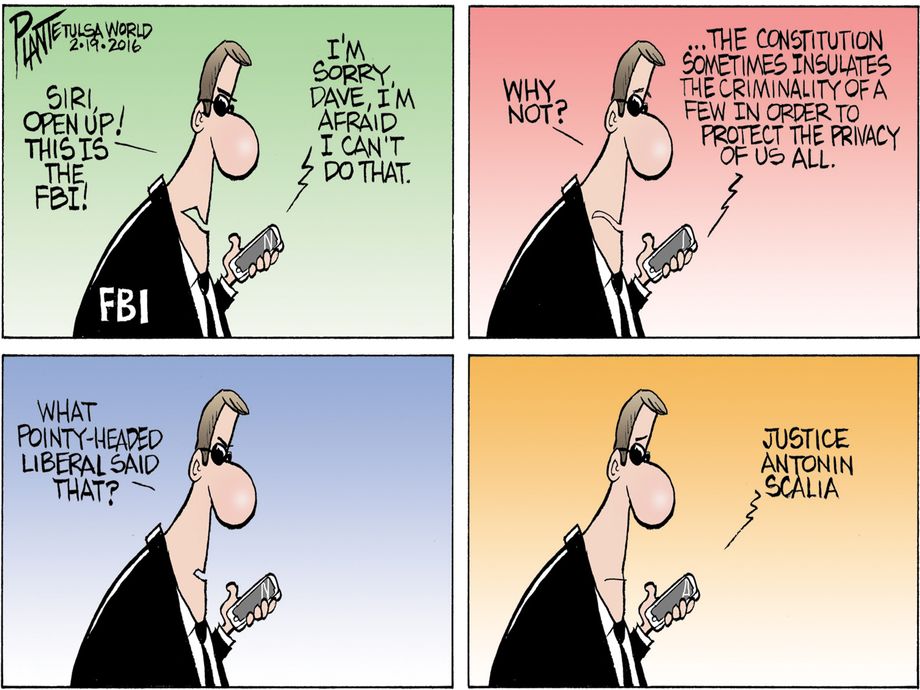

Bruce Plante captures a quote from the late Justice Scalia which is as relevant in this case as when he stated it in 1987:

Bruce Plante World Editorial Cartoonist

Tulsa World. Feb 20, 2016.

Update: Things move quickly in cases like this. The Department of Justice has indicated that, thanks to a third party, it may no longer need Apple’s assistance in unlocking the phone. In some ways, this is a good thing. The FBI will likely get access to what it needs for its case, and Apple won’t be compelled to act against its customers (for now) … though it may have a critical security flaw that it will want to fix. But most of the issues in this post have not gone away. Another case may find the DOJ asking for the same thing, so this “crypto war” is far from over.